Art is the imposing of a pattern on experience, and our aesthetic enjoyment is recognition of the pattern.

Alfred North Whitehead

Much of today’s well-crafted software is designed with what are called “design patterns.” Inspired by the architectural writings of Christopher Alexander, software design patterns make computer programs more flexible and adaptable to change. Unlike programs made of thousands of interdependent lines of code, where a small change to a single line may force changes throughout the rest of the program, software designed with patterns allows chunks of code, or modules, to be modified or even replaced without requiring changes to the remainder of the program. Modules become interchangeable parts, each representing a solution that can be replaced by another solution as the program evolves and meets new challenges.

A number of common software design patterns have been cataloged into a “language of patterns” that is shared by developers around the world, with the same patterns solving similar problems in applications as varied as defense systems and retail sales. Although the primary advantage of design patterns is that they make software modules independent of one another, different patterns address the different problems that arise throughout the software industry.

Occasionally, a particular design pattern fits a problem space so well that it has the potential to dominate that space. One example is the application of the so-called “Composite Pattern” to financial information. The Composite Pattern, in its simplest form, generates from the primitive data of recorded bookkeeping entries all of the information produced by a fully staffed corporate financial department. It simplifies the whole accounting process to the point where, once the pattern is implemented in the corporate software, a layman could generate the high-level information that such a department produces.

How this is done is detailed in my book, The Tao of Financial Information; however, a brief introduction can be presented here. First, let’s look at what the Composite Pattern is. According to Wikipedia:

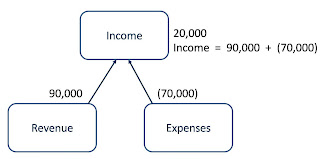

The intent of composite is to compose objects into tree structures to represent part-whole hierarchies. Composite lets clients treat individual objects and compositions uniformly.

(see http://en.wikipedia.org/wiki/Composite_pattern)

Briefly stated, the Composite Pattern is a hierarchical tree of objects where higher objects in the hierarchy are “composed” of parts that are represented below them in the hierarchy. For example, as shown in the diagram above, the concept of Income that is so important to the business world is a composite of its parts, Revenue and Expenses.
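The Income example can be sketched in Python as a minimal illustration of the pattern, not the implementation from the book. The class names `Component`, `Account`, and `AccountGroup` are hypothetical, the amounts are invented, and the sketch assumes debit balances are stored as positive numbers and credit balances as negative ones.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class Component(ABC):
    """Common interface shared by single accounts and groups of accounts."""

    def __init__(self, name: str) -> None:
        self.name = name

    @abstractmethod
    def balance(self) -> float:
        """Return the signed balance of this node."""


class Account(Component):
    """Leaf: an individual account holding a recorded balance."""

    def __init__(self, name: str, amount: float) -> None:
        super().__init__(name)
        self.amount = amount

    def balance(self) -> float:
        return self.amount


class AccountGroup(Component):
    """Composite: a concept whose balance is the sum of its parts."""

    def __init__(self, name: str, parts: list[Component] | None = None) -> None:
        super().__init__(name)
        self.parts: list[Component] = list(parts or [])

    def add(self, part: Component) -> None:
        self.parts.append(part)

    def balance(self) -> float:
        # Each part answers balance() the same way, whether leaf or group.
        return sum(part.balance() for part in self.parts)


# Hypothetical amounts; assumed convention: debit positive, credit negative.
income = AccountGroup("Income", [
    Account("Revenue", -1000.0),   # credit balance
    Account("Expenses", 700.0),    # debit balance
])

# The client treats the composite and its leaves uniformly.
for node in [income, *income.parts]:
    print(node.name, node.balance())
```

Because `AccountGroup` accepts any `Component`, a group can contain other groups, so deeper hierarchies need no additional code.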

What makes this rather obvious observation so valuable is that the balance of the higher object, Income, is simply the sum of the balances of its parts, Revenue and Expenses. A plain sum works because Revenue and Expenses have opposing balances: Revenue typically carries a credit balance and Expenses typically a debit balance, and the numeric signs reflect this, so adding the two nets Expenses against Revenue.
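The sign convention can be shown with plain arithmetic. This sketch assumes, as many bookkeeping systems do, that debits are recorded as positive numbers and credits as negative ones; the helper names `signed` and `describe` and the amounts are hypothetical.

```python
DEBIT = "debit"
CREDIT = "credit"


def signed(amount: float, side: str) -> float:
    """Convert an unsigned amount to a signed one (assumed: debit +, credit -)."""
    if side == DEBIT:
        return amount
    if side == CREDIT:
        return -amount
    raise ValueError(f"unknown side: {side!r}")


def describe(balance: float) -> str:
    """Report a signed balance in debit/credit terms."""
    if balance < 0:
        return f"{-balance:.2f} credit"
    if balance > 0:
        return f"{balance:.2f} debit"
    return "0.00"


revenue = signed(1000.0, CREDIT)   # credit balance -> -1000.0
expenses = signed(700.0, DEBIT)    # debit balance  -> 700.0
income = revenue + expenses        # a plain sum nets expenses against revenue

print(describe(income))  # 300.00 credit: a net profit
```

If expenses had been 1200.00, the same sum would give a 200.00 debit balance, a net loss, without any special-case subtraction.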

In the same manner, we can place all of the major concepts in the financial solution space into an expanded form of this hierarchical tree, with each higher-level concept having a balance that is the sum of its parts. This reduces financial information to a single pattern, or data structure, of computer science, allowing a simple program to generate all the financial numbers automatically as the objects in the tree are updated in real time.
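One way to read “updated in real time” is sketched below: each posting to a leaf account immediately adds its signed amount to every ancestor, so every concept in the tree always holds a current balance. This is an assumption about how such a program might work, not the method described in the book; the `Node` class, its `post` method, and the account tree (Sales, Rent, Wages) are hypothetical, and debits are again assumed positive and credits negative.

```python
from __future__ import annotations

from typing import Optional


class Node:
    """A concept in the financial tree; only leaves receive postings directly."""

    def __init__(self, name: str, parent: Optional[Node] = None) -> None:
        self.name = name
        self.parent = parent
        self.children: list[Node] = []
        self.balance = 0.0
        if parent is not None:
            parent.children.append(self)

    def post(self, amount: float) -> None:
        """Record a signed amount on a leaf and push the change up to the root."""
        if self.children:
            raise ValueError(f"{self.name} is a composite; post to a leaf account")
        node: Optional[Node] = self
        while node is not None:
            node.balance += amount
            node = node.parent


# Hypothetical tree: Income <- Revenue <- Sales; Income <- Expenses <- Rent, Wages
income = Node("Income")
revenue = Node("Revenue", income)
expenses = Node("Expenses", income)
sales = Node("Sales", revenue)
rent = Node("Rent", expenses)
wages = Node("Wages", expenses)

sales.post(-1000.0)  # a sale: credit to Sales
rent.post(400.0)     # rent incurred: debit to Rent
wages.post(300.0)    # wages incurred: debit to Wages

for node in (income, revenue, expenses):
    print(node.name, node.balance)
```

Propagating each change upward makes a posting cost proportional to the depth of the tree while every balance can be read instantly; recomputing sums on demand makes the opposite trade. A production ledger would also use `decimal.Decimal` or integer cents rather than floats.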